I can’t open that either


2·1 day ago

In my experience, if I don’t salt the hell out of the water it’ll end up bland. That’s just what I found through trial and error

That’s some heavy-duty conjecture. Lots of birds will eat anything

Mine was $20 from a thrift store, plus maybe $40 in parts. Also a pos, but it’s a pos that will get me there

4·2 days ago

I just did a study with a sample size of 1 and the results are in: doesn’t work

We have functional agency

Is this scientifically provable? I don’t see how this isn’t a subjective statement.

Artificial intelligence requires agency and spontaneity

Says who? Hollywood? For roughly seventy years the term has been used by computer scientists to describe computers using “fuzzy logic” and “learning programs” to solve problems that are too complicated for traditional data structures and algorithms to tackle reasonably. It’s a very general and fluid field of computer science, as old as computer science itself. See the Wikipedia page

And finally, there is no special sauce to animal intelligence. There’s no such thing as a soul. You yourself are a Rube Goldberg machine of chemistry and electricity, your only “concepts” obtained through your dozens of senses constantly collecting data 24/7 since you were an embryo. Not that the intelligence of today’s LLMs is comparable to ours, but there’s no magic to us; we’re Rube Goldberg machines too.

That’s just kicking the can down the road, because now you have to define agency. Do you have agency? If you didn’t, would you even know? Can you prove it either way? In any case, this is no longer a scientific discussion but a philosophical one, because whether an entity has “intelligence” or “agency” is not a testable question.

These systems do not display intelligence any more than a Rube Goldberg machine is a thinking agent.

Well now you need to define “intelligence” and that’s wandering into some thick philosophical weeds. The fact is that the term “artificial intelligence” is as old as computing itself. Go read up on Alan Turing’s work.

2·4 days ago

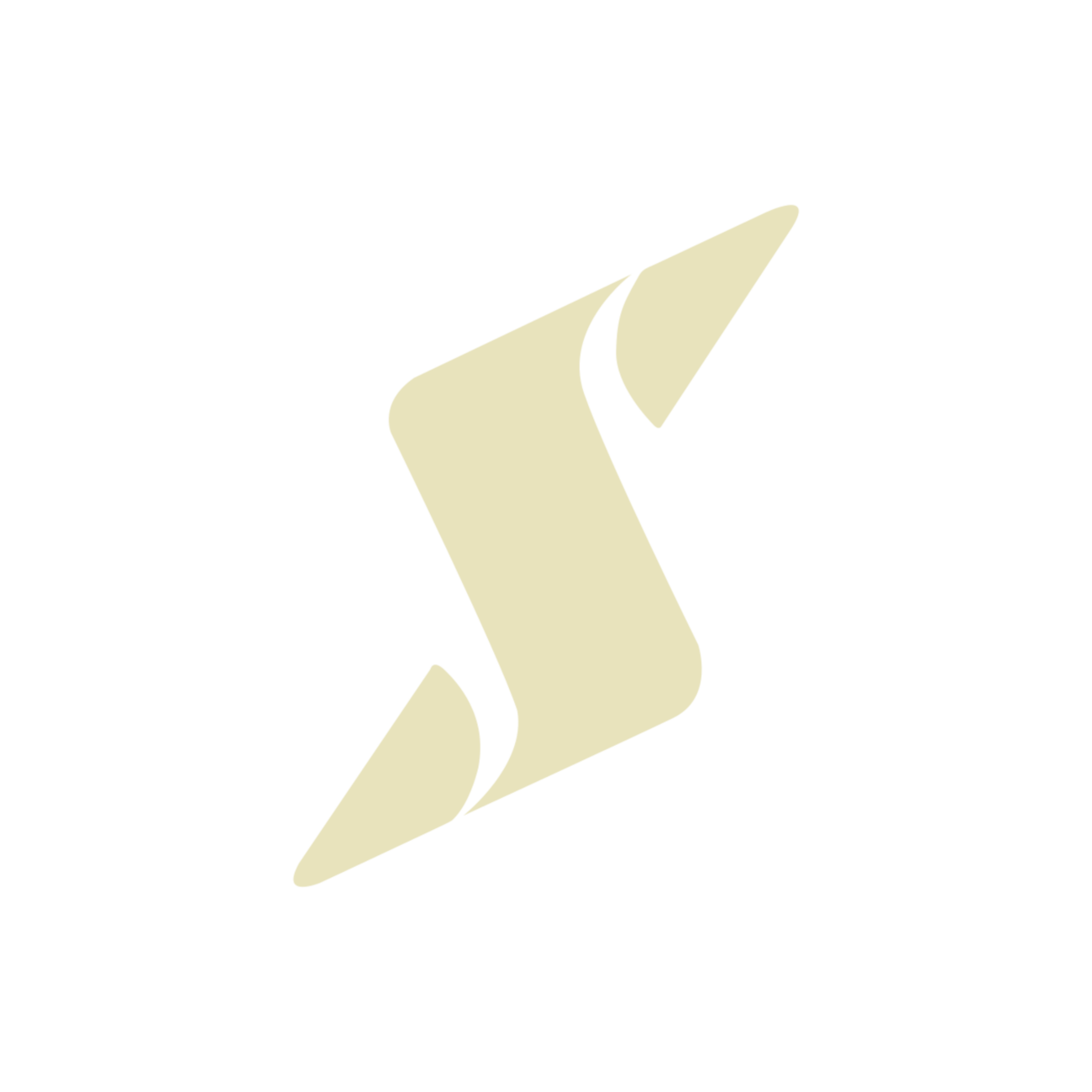

I’ve never built or trained an LLM, but I did get to play around with some smaller neural networks. My neural networks professor described this phenomenon as “overfitting”: when a model is trained too long on a dataset that’s too small for it, it sort of cheats by “memorizing” arbitrary details of the training dataset (flaws in the images like compression artifacts, minor correlations found only coincidentally in the training dataset, etc.) to improve its performance on that training dataset.

The problem is that because the model is now getting hung up on random details of the training dataset instead of the broad strokes that actually define the subject matter, its results on the validation and testing datasets will suffer. My understanding is that the effect is more pronounced when a model is oversized for its data, because a smaller model wouldn’t have the “resolution” to overanalyze like that in the first place.
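To make the “resolution” point concrete, here is a minimal toy sketch, assuming a one-dimensional dataset invented purely for illustration (not anything from the homework). The names `fit_one_nn`, `fit_threshold`, `QUIRKS`, and the data are all hypothetical. A 1-nearest-neighbour model stores every training example and so can memorize two deliberately flipped labels, while a one-parameter threshold model simply can’t represent them.

```python
from typing import Callable, List, Tuple

Example = Tuple[float, int]  # (feature value, class label)


def true_label(x: float) -> int:
    """The 'real' rule the models should learn: class 1 iff x >= 10."""
    return 1 if x >= 10 else 0


# Hypothetical training set: x = 0..19, with two labels flipped to stand in for
# coincidental quirks (think compression artifacts) in the training data.
QUIRKS = {3, 15}
train: List[Example] = [
    (float(x), true_label(x) ^ (1 if x in QUIRKS else 0)) for x in range(20)
]
# Hypothetical validation set: nearby points with clean labels.
valid: List[Example] = [(x + 0.4, true_label(x + 0.4)) for x in range(20)]


def fit_one_nn(data: List[Example]) -> Callable[[float], int]:
    """High-'resolution' model: keeps every example, copies the closest one's label."""
    def predict(x: float) -> int:
        nearest = min(data, key=lambda ex: abs(ex[0] - x))
        return nearest[1]
    return predict


def fit_threshold(data: List[Example]) -> Callable[[float], int]:
    """Low-capacity model: one cutoff t, chosen to minimise training errors."""
    def errors(t: float) -> int:
        return sum((1 if x >= t else 0) != y for x, y in data)
    best_t = min(sorted({x for x, _ in data}), key=errors)
    return lambda x: 1 if x >= best_t else 0


def accuracy(model: Callable[[float], int], data: List[Example]) -> float:
    """Fraction of examples whose label the model predicts correctly."""
    return sum(model(x) == y for x, y in data) / len(data)


if __name__ == "__main__":
    for name, fit in [("1-NN (memorizer)", fit_one_nn), ("threshold", fit_threshold)]:
        model = fit(train)
        print(f"{name}: train={accuracy(model, train):.2f} valid={accuracy(model, valid):.2f}")
```

On this toy data the memorizer scores perfectly on training but loses two validation points next to the flipped labels, while the threshold model gives up those two training points and gets every validation point right.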

Here’s an example I found from my old homework of a model that started to become overfit after the 5th epoch or so:

By the end of the training session, accuracy on the training dataset was pushing the high 90s, but it never broke 80% on validation. Training and validation loss diverged even more sharply. Whatever the model learned to get that 90-something percent in training clearly isn’t broadly applicable to the subject matter, so this model is considered “overfit” to its training data.
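A common way to act on a curve like that (not something mentioned above, so treat it as an aside) is early stopping: keep the weights from the epoch with the lowest validation loss and stop once validation loss hasn’t improved for a few epochs. This is a minimal sketch; `early_stopping_epoch`, `patience`, and the loss values are hypothetical, made up only to have the same shape as described, and are NOT the homework’s numbers.

```python
import math
from typing import List, Sequence


def early_stopping_epoch(val_losses: Sequence[float], patience: int = 3) -> int:
    """Return the 1-based epoch with the lowest validation loss seen before
    `patience` consecutive epochs pass without improvement (0 if no epochs)."""
    best_epoch, best_loss, waited = 0, math.inf, 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best_loss:
            best_epoch, best_loss, waited = epoch, loss, 0
        else:
            waited += 1
            if waited >= patience:
                break  # validation loss has stopped improving; stop training here
    return best_epoch


def generalization_gap(train_losses: Sequence[float], val_losses: Sequence[float]) -> List[float]:
    """Validation loss minus training loss per epoch; a growing gap signals overfitting."""
    return [v - t for t, v in zip(train_losses, val_losses)]


# Made-up loss curves (hypothetical): training keeps falling, validation bottoms out at epoch 5.
train_loss = [0.90, 0.70, 0.55, 0.45, 0.38, 0.30, 0.24, 0.18, 0.13, 0.09]
val_loss = [0.95, 0.80, 0.68, 0.62, 0.60, 0.63, 0.68, 0.74, 0.81, 0.90]

if __name__ == "__main__":
    print("stop at epoch", early_stopping_epoch(val_loss))
    print("gap per epoch", [round(g, 2) for g in generalization_gap(train_loss, val_loss)])
```

Most training frameworks ship an equivalent callback, so in practice you would configure that rather than hand-roll the loop.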

I used to get this all the time when I was really young, like less than 8, but I’m an adult now and haven’t had it happen since then at all. I wonder why?

Mutual I’m sure

1·6 days ago

I think The Matrix would be a great one. I have no idea why it’s rated R

I don’t speak or read German but every once in a while a meme will have a critical mass of words I understand because of their proximity to English, and the German 100% amplifies the meme.

1·6 days ago

If anything, it seems more important to me for a slower language to be optimized. Ideally everything would be perfectly optimized, but over-optimization is a thing: making optimizations that aren’t economical. Even though C is many times faster than Python, for many projects Python is fast enough that the difference has no practical effect on the user. They’re not going to bitch about a function taking 0.1 seconds to execute instead of 0.001, but they might start to care when that becomes 100 seconds vs 1. As the program becomes more time-intensive to run, the Python version is going to hit the threshold where the user starts to notice before the C version does, so economically, the Python code would need to be optimized first.
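Note that both examples are the same 100x ratio; what changes is the absolute time, which is why the decision hinges on a perceptible threshold rather than on relative speed. A minimal sketch of “measure first, then decide” in Python, assuming a hypothetical `NOTICEABLE_SECONDS` cutoff of 1 second (pick whatever fits your users); `worth_optimizing` is an illustrative name, not a library function.

```python
import time
from typing import Any, Callable, Tuple

# Hypothetical cutoff for "the user starts to notice"; tune for your program.
NOTICEABLE_SECONDS = 1.0


def worth_optimizing(func: Callable[..., Any], *args: Any,
                     threshold: float = NOTICEABLE_SECONDS,
                     repeats: int = 3) -> Tuple[bool, float]:
    """Time func(*args) a few times and flag it only if even the best run
    crosses the absolute threshold. Returns (flag, best_seconds)."""
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        func(*args)
        best = min(best, time.perf_counter() - start)
    return best >= threshold, best


if __name__ == "__main__":
    print(worth_optimizing(sum, range(1000)))                    # fast: not worth touching
    print(worth_optimizing(time.sleep, 0.05, threshold=0.01))    # crosses the (tiny) threshold
```

Taking the best of several runs filters out one-off hiccups; for finer micro-benchmarks the standard library’s `timeit` module is the more careful tool.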

Can’t have a good night, there’s no power because windmills only work in the day! smh

It’s great for verbose log statements

Hey I resemble that remark

Same, I’m the person in the meme. I don’t get useful energy from caffeine just stress. Soda and tea are fine, but I try to stay away from black coffee and energy drinks because they tend to trigger panic attacks in me.